When Cambridge Analytica used Facebook data to identify the personalities of American voters in order to influence their behavior, it was both violating the letter of Facebook’s rules and taking Facebook’s business model to its logical next step.

Facebook, after all, is designed to collect data from its users, share that data with those who want to send messages to them (advertisers), and give those partners the tools to target audiences by their demographics and psychographics.

That’s how Facebook made $16 billion in profit on revenues of $40.7 billion in 2017. And that’s how the whole digital advertising ecosystem works; it’s a $330 billion market worldwide.

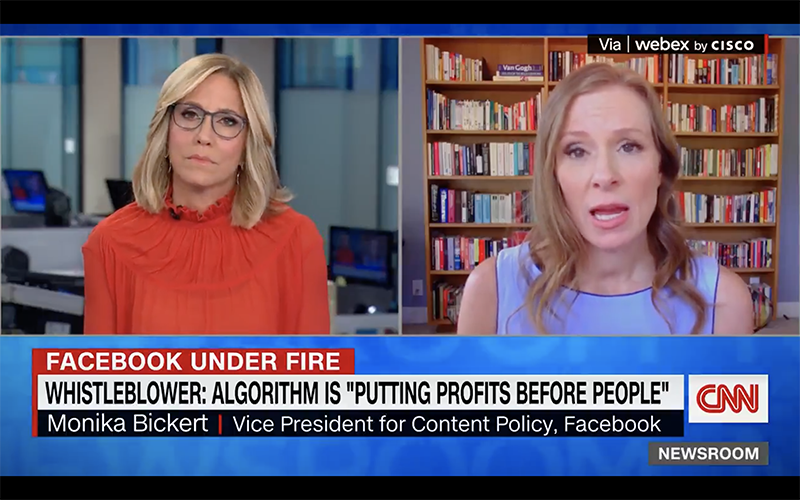

The revelations about how Cambridge Analytica exploited Facebook raise a host of questions about both companies. Most pressing for users of Facebook: its lack of oversight and transparency betrays a company that seems to care more about its business partners than its users. The problem is not unique to Facebook.

The issue can be traced back to the original sin of the digital era: ad-supported services that offered free access in exchange for personal data. While Facebook and Google have dominated digital ad sales using that model, the rest of the online publishing industry has floundered. Today digital publishers are attempting to pivot to a subscription model, forced to convince readers that what they once got for free is actually worth paying for.

The ad-driven business model made consumers into second-class citizens as digital publishers optimized their services for advertisers rather than for the customer experience. Their message to consumers: your data is not personally identifiable, “personalized” ads are actually a benefit, and, after all, what do you expect to get for free?

The Facebook hack blows up this logic and makes the real tradeoff painfully clear. Whether in the context of political persuasion or selling packaged goods, the business of online advertising lies in combining first-party data – information you either give or that can be gleaned from your actions – with third-party data that can be bought from other providers, to create a detailed picture of who you are. In the case of Facebook, whose ad-targeting system is one of the most sophisticated in the world, the issue was exacerbated by non-Facebook apps on the site that collected user data and the data of users’ friends. That’s what led to the Cambridge Analytica crisis now enveloping Facebook.

Every online publisher and social platform that sells advertising faces the same balancing act: the need to monetize data about their customers while at the same time protecting that data. Consumers are caught in the middle, left with little more to rely on than a leap of faith.

Help is on the way in the form of Europe’s General Data Protection Regulation, set to go into effect in May. While the regulations are aimed at companies doing business in the European Union, the implications are global, and will certainly have a significant impact on how America’s Big Tech companies operate.

GDPR will ensure that consumers consent to companies collecting and using their information. It will restrict what types of personal data companies can collect, store and use in the EU and will regulate the export of such data outside the EU. Companies that do not comply could face fines of up to 4 percent of their annual global revenue or 20 million euros, whichever is greater.
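To get a feel for the scale of that penalty, here is a minimal sketch of the "whichever is greater" rule as described above. The function name is hypothetical, the figures are illustrative, and the example ignores currency conversion: it simply treats Facebook's reported $40.7 billion in 2017 revenue as if it were denominated in euros. Actual fines are set by regulators and can be far below this ceiling.

```python
# Illustrative only: the fine ceiling as the article describes it,
# i.e. the greater of 4 percent of annual revenue or 20 million euros.
# Integer arithmetic is used to avoid floating-point rounding.

FLAT_CEILING_EUR = 20_000_000  # 20 million euros
REVENUE_SHARE_PERCENT = 4      # 4 percent of annual revenue


def max_gdpr_fine(annual_revenue_eur: int) -> int:
    """Return the maximum possible fine (hypothetical helper)."""
    revenue_based = annual_revenue_eur * REVENUE_SHARE_PERCENT // 100
    return max(revenue_based, FLAT_CEILING_EUR)


# A small company with 100 million in revenue: 4 percent is only
# 4 million, so the 20 million flat ceiling applies.
small_company = max_gdpr_fine(100_000_000)

# Assumption: 40.7 billion treated as euros, purely for illustration.
large_platform = max_gdpr_fine(40_700_000_000)

print(small_company)
print(large_platform)
```

The flat 20 million euro figure matters only for companies with less than 500 million euros in revenue; for a company of Facebook's size, the 4 percent figure dominates and puts the ceiling well above a billion.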

The Cambridge Analytica hack of Facebook would surely have drawn a massive fine for Facebook under GDPR, had it already been in effect. Even in the U.S. there is speculation that the Federal Trade Commission may have cause to fine Facebook amounts into the billions, depending on what the facts turn out to be. (Facebook settled an earlier investigation by the FTC by signing a consent decree in 2011 that required it to alert consumers when their data was improperly acquired by others.)

The exploitation of Facebook is sensational, but at root it is the exploitation of a system that is just a more sophisticated version of those at almost every ad-driven web service. Consumers clearly need to rely on more than good faith to protect their interests. GDPR will set the technical bar; who will set the ethical one?

Facebook, GDPR, and the Perils of Online Advertising
