I never thought I would question where ‘thought’ came from. All my life, there was only one source for opinion and creative thinking: the human mind. That changed with the arrival of ChatGPT and other forms of generative AI. For the first time in human history, society will interact with thoughts and ideas not formed by people. So, what does this mean?

The “positives” we could focus on are its usefulness for generating reports and recaps based on technically objective information. But that is a very short-sighted view of what this AI can do. We need to understand that such uses are neither the limit of its capabilities nor where the problems lie.

In actuality, generative AIs are in the business of imitation and deception. Specifically, they imitate human likeness through thought and, in doing so, deceive their readers. From a social health standpoint, the implications are worrying at best.

Society’s intellectual nucleus is founded on the legacy of human thought. Information generated by an AI built to imitate human thought could corrupt an already fragile social climate, because it is not grounded in human experience, the root of any idea.

Now, we could say that AI cannot technically create new thoughts if it’s pulling its information from sources written by human beings. And at this point, I agree. But over time, as this AI generates and publishes “thoughts” and information globally, it will begin to pull from sources not created by humans. That’s where the threat to our social sphere comes into play. Once AI starts pulling from AI, the potency of human thought begins to decline.

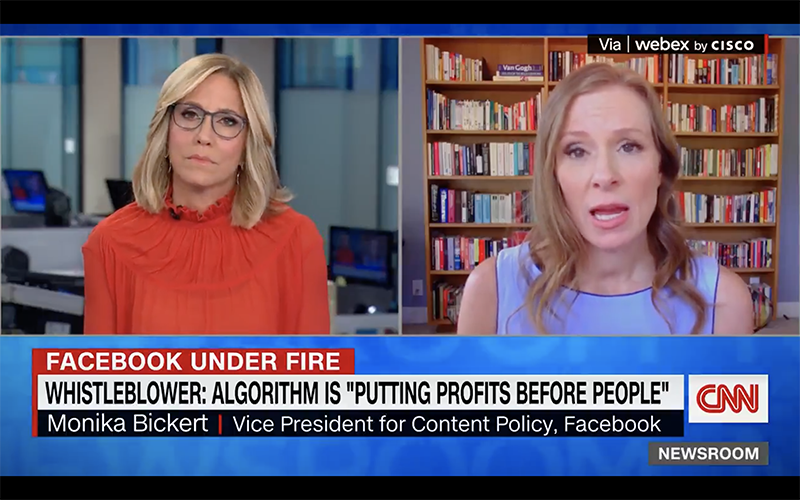

Another point of contention is the idea that this kind of AI would be used, or at least marketed, as a genuinely unbiased and objective fact checker. Here’s why that’s problematic: any form of AI is created by people, meaning it carries their unconscious biases. It is therefore just as impossible for an AI to be truly neutral as it is for a human. Never mind that the idea implies we would depend on a computer to “tell us the truth.” Furthermore, since it’s an imitation of human thought, where do ethical and moral judgment come into play? Because it cannot form judgment through any experience of its own, its concept of judgment must be programmed according to the parameters set by its creators. This means an AI could be programmed to agree with any archaic ideology, further corrupting the social sphere, especially if it is given the title of “fact checker.”

Unfortunately, unlike deep fakes, generative AI is easy to find and use. For all you know, I could have co-authored this with one. This points to another way this technology is deceptive. Currently, we have no way to tell whether the words we ingest are the product of a human behind a keyboard. We’ve never had to ask the question before. Now that we do, we need an identifier for what is and isn’t human thought.

Simply put, ChatGPT and other forms of generative AI are no more than deep fakes of human thought, imitating and deceiving with ideas instead of a face.