The day after Valentine’s Day, Tesla released a “Robot Dating” video that generated 4 million views in a day, suggesting rather oddly that you “find your perfect match” with one of its industrial robots.

But humans are already dating robots, or at least dating artificial intelligence (AI) programs. You don’t need to watch the movie “Her,” or listen to the Atlanta Rhythm Section’s “Imaginary Lover,” for examples. The “chatbot” company Replika claims more than half a million monthly users of its customizable companions, and co-founder Eugenia Kuyda says that about 40 percent of them think of the app as a romantic partner. One user calls Replika “…the best thing that ever happened to me.” Apparently, humans can “love” a piece of software that is constantly responsive, endlessly attentive, and adaptive to our personal needs.

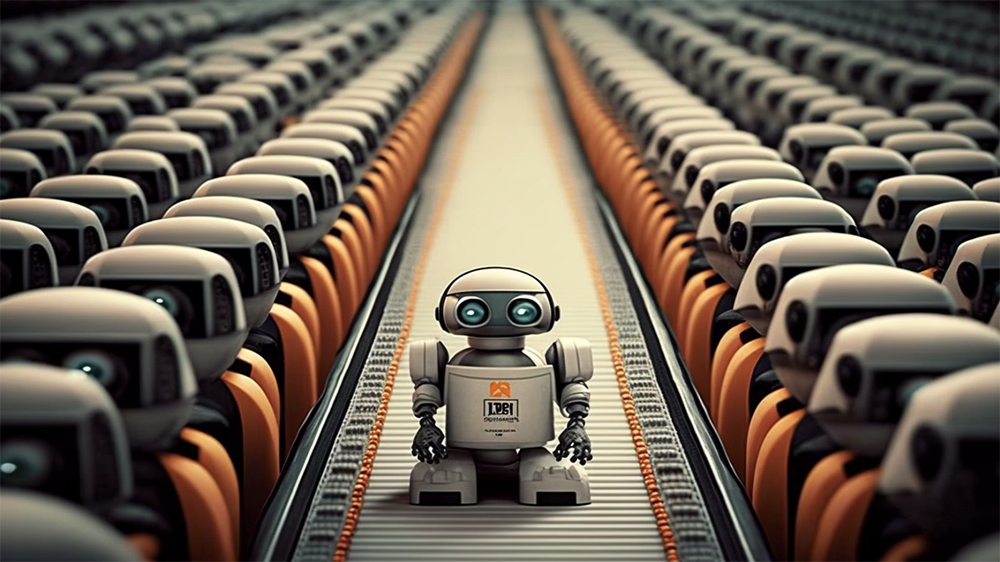

But will we love a trillion robots?

Suppose that we decide to grant personhood to software. We are a gullible species, easily falling for algorithms masquerading as people on social media. If a knowledgeable software engineer can come to believe in AI sentience, it’s quite likely that the drumbeat for AI personhood will grow louder as we embed AI coaches and therapists into our lives and inevitably fall for the Eliza effect. The European Parliament is recommending how and when a robot should be considered sentient (and an Idaho lawmaker recently introduced legislation forbidding AI to attain personhood). Just as there is a Society for the Prevention of Cruelty to Animals, there has been one for robots since 1999. While the first robot citizen is widely considered a marketing gimmick, what happens to human systems when there are a trillion “person programs”?

Once autonomous software can write autonomous software, the only hard-coded limits will be those we initially impose. But even those baked-in guardrails aren’t likely to survive wave after wave of software auto-rewrites. Give a software generation tool enough storage and processing power, and point it at a lot of the tasks that humans are already doing, and the sheer scale of what our digital tools can create is quite literally unimaginable.

Our potential AI dystopia won’t come so much from the kinds of work that our technology could do, or where it will appear. It will come from how many of them there can be.

Picture a Silicon Valley version of Goethe’s “The Sorcerer’s Apprentice,” creating unlimited numbers of digital brooms. What happens if the all-powerful Sorcerer never returns to rein in those exponential creations, as the software robots expand without limit into the workplace and beyond?

We’ll rapidly find that our human systems are quite vulnerable to this kind of technology at scale. Let’s say we award a patent to a set of algorithms. Then we design algorithms that can create patent-generating algorithms, designed intentionally to pass the “unique invention” sniff test. Press a button, and now there are a trillion of them. At the very least, our human filtering systems are flooded, and the percentage of actual human-created inventions becomes a rounding error.

Limitless scale is a unique problem for our monkey minds. We can only make so many physical robots. But software isn’t just eating the world. Code writing code has the capacity to overwhelm entire human systems in a race of our own technology against our own technology, with humans relegating themselves initially to being referees, and eventually bystanders. First they’re our tools. Then they’re our equals. Then there are a trillion of them.

Once we create self-interested AI, we won’t put the genie back in the bottle, because there won’t be a bottle. There will simply be far more digital agents than there will be of us. I don’t worry about Skynet. I worry about The Sorcerer’s AI.

So how can we fix it, before it happens?

First, we need to perpetually challenge any belief in the infallibility of software. Our fractal human flaws inevitably foster stunningly fallible and opaque algorithms. Our software is not automatically an egalitarian friend, and our cognitive biases guarantee capricious applications. Every single software engineer needs early ethics training to ensure their work is deeply rooted in empathy and altruism — for humans, not robots. One researcher wants to encourage “loving AGIs,” but there’s no way to guarantee that all AGIs will be loving.

Second, we must beware of false AI equivalency. Our language is already sliding into a dangerous realm with the advent of cobots and human-machine partnerships in the workplace. We never talked about “human-plow partnerships.” A pen is a tool for self-expression — but I don’t partner with a pen. Elevating robots and software inevitably diminishes humans. Let’s keep these tools in their Pandora’s box.

Third, we need self-limiting open-source widgets that companies must embed in their software to provide guardrails for scale. Self-replicating software needs self-replicating kill switches.

Finally, we need an ecosystem of agreements, from self-regulation to single-society laws to worldwide ethics standards, specifically focused on managing the scale and complexity of our technological progeny. We already have global agreements to keep virulent biological agents from escaping into the wild, and to constrain the use of gene-modification technology to hack humans. We need the same for our future weapons of mass software production.

There’s no reason we shouldn’t enjoy one or two cuddly robots in our lives. They’re likely to become as omnipresent as our digital distraction devices, i.e., cellphones.

But I don’t think we will love a trillion of them.

—

Gary A. Bolles is the author of The Next Rules of Work, and the Chair for the Future of Work for Singularity University.