Despite its growth and popularity, AI is not as widely adopted as it could be—particularly by small- and mid-size businesses that lack the enormous data sets of business and tech giants. One reason is that AI designers are not doing enough to consider the end user.

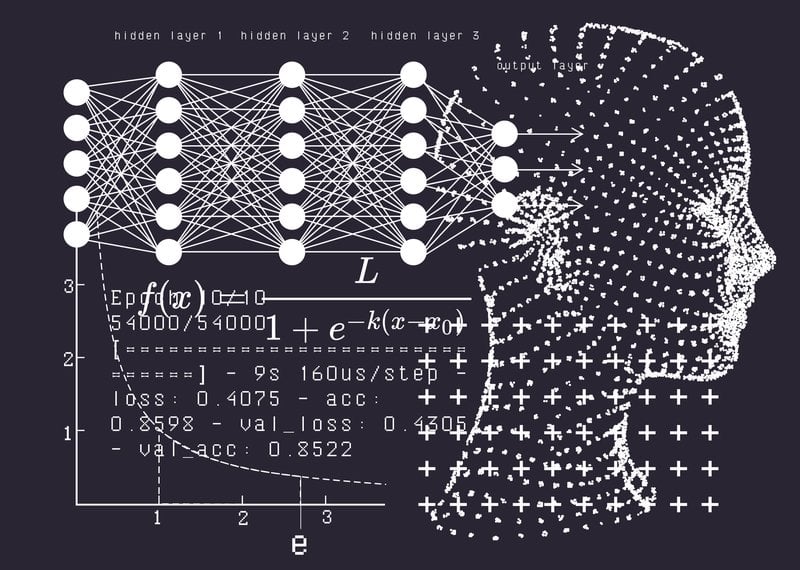

Consider the many stages an AI solution must pass through to be successfully integrated into a company’s workflow. An algorithm begins with an AI expert, who must incorporate knowledge from a subject matter expert. Then the tool needs approval from leadership, and it has to be adopted by users. Each of those steps may require the solution to meet a different need, yet too often our algorithms do not reflect the complexity each stakeholder brings to the table.

Take, for instance, an algorithm used for hiring. The subject matter expert, who knows which job criteria matter, might need to provide relevant data for the AI (a neural network, say) to learn from. Then the HR and executive leaders who must approve the tool may want to know how the algorithm combats bias, whether it can help them find non-obvious candidates, and even how to compare similarly ranked candidates. (For example, potential bias might be reduced by ensuring an applicant’s years of experience “count” more than the name of their college.) Meanwhile, end users will care about ease of use and whether they get what they’re looking for when they use it.

It’s a lot to ask of one tool, but we can design for it. This is where fuzzy logic, a branch of mathematics that has been around since the 1960s, can help. In essence, a fuzzy classification algorithm lets us communicate shades of grey where a neural network alone might show only black and white. Fuzzy logic is built for imprecise, uncertain data: instead of labeling a statement simply true or false, it describes the degree to which the statement is true. Exploiting these and other fuzzy properties lets us build transparency and explainability into our tools, so that we can not only see an outcome but also understand, in human terms, why it emerges.

Let’s look at a basic example: a mug of hot chocolate. Too hot and you’ll burn your tongue. Too cool and it no longer warms you on a winter day. But there’s not just one drinking temperature for hot chocolate; there’s a window of temperatures within which it’s ideal. If we tried to quantify this scenario using only the 1s and 0s of a typical classification algorithm, we would have to say 1 = good to drink and 0 = not good to drink. With fuzzy logic, we can also represent the values between 0 and 1, describing how much you would enjoy the beverage at a given temperature.
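A minimal sketch of the hot chocolate example, assuming a trapezoidal membership function (a common choice in fuzzy logic, though not the only one). The breakpoints in degrees Celsius (`TOO_COOL`, `IDEAL_LOW`, `IDEAL_HIGH`, `TOO_HOT`) are hypothetical values picked for illustration, not measured preferences, and `crisp_drinkable` is a hypothetical stand-in for a binary classifier that must commit to a hard cut-off.

```python
# Hypothetical breakpoints in degrees Celsius, for illustration only.
TOO_COOL, IDEAL_LOW, IDEAL_HIGH, TOO_HOT = 40.0, 50.0, 60.0, 70.0


def trapezoid(x: float, a: float, b: float, c: float, d: float) -> float:
    """Trapezoidal membership: 0 outside (a, d), rising linearly from a to b,
    1 on [b, c], and falling linearly from c to d."""
    if x <= a or x >= d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def drinkability(temp_c: float) -> float:
    """Fuzzy degree (0.0 to 1.0) to which a temperature is 'good to drink'."""
    return trapezoid(temp_c, TOO_COOL, IDEAL_LOW, IDEAL_HIGH, TOO_HOT)


def crisp_drinkable(temp_c: float) -> int:
    """Binary stand-in: 1 only inside the ideal window, otherwise 0."""
    return 1 if IDEAL_LOW <= temp_c <= IDEAL_HIGH else 0


if __name__ == "__main__":
    for t in (35.0, 45.0, 55.0, 65.0, 75.0):
        print(f"{t:.0f} C  crisp={crisp_drinkable(t)}  fuzzy={drinkability(t):.2f}")
```

At 45 °C the crisp version reports 0, while the fuzzy version reports 0.50, meaning “halfway into the drinkable window”: exactly the nuance the binary label throws away.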

Why does this matter for business? Because for too long we’ve used classification algorithms like neural networks that force a data point into a yes-or-no, 1-or-0 duality. The power of these neural networks is vast, but without blending in other mathematics like fuzzy logic, our ability to explain how they work is limited. Neural networks excel at uncovering patterns, but they don’t account for nuance, and they cannot notice when they have missed something. Unlike humans, they lack metacognition, which makes it all the more problematic that we can’t see how the algorithm reaches its conclusion. When we combine them with fuzzy logic, however, we can better uncover the fuzzy relationships that show the “degree” of each data point. The software doesn’t have to code the hot chocolate as either hot or cold; it can code it as warm.
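To make the “warm” point concrete, here is a hypothetical sketch in which one temperature is described by several overlapping linguistic labels at once. The label names, breakpoints, and helper functions (`falling`, `triangle`, `describe`) are illustrative assumptions; the sketch shows plain fuzzy membership only, not the neural-network-plus-fuzzy-logic combination described above. `crisp_label` mimics a yes-or-no classifier with a single, arbitrarily chosen 50 °C cut-off.

```python
def falling(x: float, lo: float, hi: float) -> float:
    """1 at or below lo, 0 at or above hi, linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def triangle(x: float, a: float, b: float, c: float) -> float:
    """0 outside (a, c), peaking at 1 when x == b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def describe(temp_c: float) -> dict[str, float]:
    """Degree of membership in each hypothetical temperature label."""
    return {
        "cold": falling(temp_c, 30.0, 45.0),
        "warm": triangle(temp_c, 35.0, 50.0, 65.0),
        "hot": 1.0 - falling(temp_c, 55.0, 70.0),  # rising shoulder
    }


def crisp_label(temp_c: float) -> str:
    """Binary stand-in: everything is either 'hot' or 'cold'."""
    return "hot" if temp_c >= 50.0 else "cold"


if __name__ == "__main__":
    for t in (40.0, 50.0, 58.0):
        degrees = {label: round(v, 2) for label, v in describe(t).items()}
        print(t, crisp_label(t), degrees)
```

At 58 °C the crisp version simply says “hot”, while the fuzzy description says the drink is more warm than hot, which is closer to how a person would explain it and gives a reviewer something to inspect.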

For example, you can determine the ideal amount of perseverance someone would need to succeed in a specific role. Then, using psychometrics, you can find candidates who fit those criteria. Neural networks give us the ability to find the fuzzy patterns in a set of data. Given the perseverance scores (and other metrics) of all VPs of sales, for example, the algorithm can find the degree of impact (or fuzzy membership) of each score across the perseverance spectrum.
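The idea can be sketched with a deliberately simple stand-in. Instead of a neural network, the hypothetical `fit_triangle` below derives a triangular membership function from a reference group’s scores (minimum, median, maximum), and `membership` then reports each candidate’s degree of fit. The 0 to 100 scale, the reference scores, and the candidate names are made-up placeholders, not real psychometric data; a production system would learn the shape of the function from data rather than assume a triangle.

```python
from statistics import median


def fit_triangle(scores: list[float]) -> tuple[float, float, float]:
    """Hypothetical fit: (low, peak, high) = (min, median, max) of reference scores."""
    if len(scores) < 2:
        raise ValueError("need at least two reference scores")
    return min(scores), median(scores), max(scores)


def membership(x: float, low: float, peak: float, high: float) -> float:
    """Triangular degree of fit: 1.0 at the peak, 0.0 outside [low, high]."""
    if x < low or x > high:
        return 0.0
    if x == peak:
        return 1.0
    if x < peak:
        return (x - low) / (peak - low)
    return (high - x) / (high - peak)


def rank_candidates(candidates: dict[str, float],
                    reference_scores: list[float]) -> list[tuple[str, float]]:
    """Sort candidates by how closely their score fits the reference profile."""
    low, peak, high = fit_triangle(reference_scores)
    fits = {name: membership(s, low, peak, high) for name, s in candidates.items()}
    return sorted(fits.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Made-up placeholder perseverance scores (0-100) for a reference group.
    reference = [62, 70, 74, 78, 90]
    applicants = {"candidate_a": 74, "candidate_b": 68, "candidate_c": 55}
    for name, degree in rank_candidates(applicants, reference):
        print(f"{name}: {degree:.2f}")
```

Note that a degree of 1.0 means “typical of the reference group”, not “the most perseverance”. That distinction is what lets the model express an ideal amount rather than a higher-is-better score.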

As AI designers, we need to remember that not every tool is right for every problem. We need to ask: “What problem are we trying to solve? What matters to the people involved? Who are the end users?” As the saying goes, to someone with a hammer, everything looks like a nail. Neural networks have recently been our hammer. It’s time to mix in a new tool—fuzzy logic—and discover how to create more elegant solutions.

---

Bryce Murray, PhD, is Director of Technology and Data Science at Aperio Consulting Group, where he builds algorithms to operationalize emerging technology and scale people analytics.