Amazon, Apple, Facebook, Google, and Microsoft all seek a major role in healthcare, presuming that large amounts of data about people and environments will enable rapid advances in patient care, systems management, and disease detection and prevention. But just as they move in that direction, distrust is growing rapidly over how they treat personal data and how they control and govern their increasingly gigantic systems. And some of the tech companies' early moves in healthcare have been clumsy, sloppy, or worse. Because personal health data is probably the most sensitive type there is, missteps by these companies could have an outsized impact on their image and businesses.

“In the digital age, your data IS you, and you should control it,” says Dr. Deborah Peel, founder of Patient Privacy Rights, a not-for-profit advocating for patients’ rights. Her opinion is widely shared both in the medical community and among the general public. A couple of the tech giants, and a growing number of startups, agree with her, too.

Each of the five tech giants has made substantial investments in healthcare over the past 10 years, with mixed results. Some have surreptitiously traded in consumer data in ways that, until recently, elicited little backlash. Now the companies are gaining traction by forging strategic partnerships, often involving data sharing, with medical schools, government agencies, hospitals, big pharma, and biotech companies. But even as they help fuel innovation, they are, in general, fiercely resisting new restrictions on their access to and use of healthcare data. That’s partly because there’s so much money to be made: the global market for health data could reach $68.75 billion by 2025, according to BIS Research.

Facebook, Alphabet’s Google, and Alphabet’s healthcare subsidiary Verily are all hoping to capture a piece of that market by relying on what’s called “de-identification” to protect patient-consumer privacy. The process ostensibly strips out the details that could identify an individual. But researchers say that de-identification does not reliably protect patient data. Researcher Boris Lubarsky wrote in the Georgetown Law Technology Review last year that with the proliferation of publicly available data online, as well as increasingly powerful computers, “scrubbed data can now be traced back to the individual user to whom it relates.”

And while the companies have proprietary algorithms to de-identify, anonymize, and depersonalize personal data, there are no established standards or organizations that can objectively verify whether the data is actually de-identified or whether it may be vulnerable to re-identification. Dr. Chris Culnane, a research fellow at the School of Computing and Information Systems, University of Melbourne, cautions that “there is very little information about how the data is being protected, or why we should believe it to be anonymized.” He adds, “Google and Facebook…possess arguably the largest datasets in existence. The quantity and granularity of data they possess about a large portion of the public poses a significant re-identification risk.”

Amazon’s most dramatic healthcare effort involves its own employees. Along with joint venture partners JPMorgan and Berkshire Hathaway, it will offer insurance and clinical care to a combined base of more than 1 million employees. This could give the companies access to employees’ personal health information to study inefficiencies and medical errors and to model valuable new approaches for lowering costs and improving quality of patient care. However, Ifeoma Ajunwa, assistant professor at Cornell’s Industrial and Labor Relations School, believes this access to employees’ personal information could compromise their welfare: “Insufficient protections for employee health data leave workers vulnerable to privacy invasion and employment discrimination.”

Facebook and Google’s record in healthcare so far is unlikely to inspire patient trust. Some of those companies’ efforts to acquire patient data have violated long-established health data and consumer protection policies:

- Google/Alphabet’s DeepMind obtained 1.6 million patient records containing personally identifiable information from the National Health Service in 2016.

- In early 2018, Facebook asked several hospitals to share anonymized patient medical records, and a number agreed. Facebook planned to match those records with its own user data so it could re-identify patients and help the hospitals figure out which ones might need special care or treatment. Facebook paused the project in the wake of the Cambridge Analytica scandal; it is unclear whether it will resume.

- Facebook also admitted that it had allowed third-party marketers to download the names and contact information of Facebook users, including members of private or closed groups. Members of a support group for women carrying BRCA gene mutations, which raise the risk of breast and ovarian cancer, grew suspicious when they started receiving targeted ads. Facebook claims it has closed the privacy loophole that gave marketers access.

- In November 2017, Facebook announced it was expanding its existing suicide-prevention efforts. The company uses artificial intelligence software to scan posts and live videos for threats of suicide and self-harm and alerts human reviewers, who can contact emergency responders if needed. To determine whether someone needs help, the company trains its tools on posts and videos and analyzes them in great detail. It has not said what restrictions it will place on the data it acquires about individuals this way.

Facebook and Google’s tactics feel familiar because they dovetail with their basic business model: personalized advertising. Google, Facebook, and Amazon all collect and analyze personal health data as an extension of their thriving core businesses. They target consumers with disease-specific and other health- and wellness-related ads, and until very recently they did so even in so-called private patient forums.

But unlike the data used to target other consumer ads, healthcare data carries the weight of regulation, notably the patient data privacy rules of the Health Insurance Portability and Accountability Act (HIPAA). First enacted in 1996, HIPAA has lost its teeth as data science and analytics have rapidly progressed. “Data scientists can now circumvent HIPAA’s privacy protections by making very sophisticated guesses, marrying anonymized patient dossiers with named consumer profiles available elsewhere with a surprising degree of accuracy,” says Adam Tanner, author of Our Bodies, Our Data.

Apple has taken a different approach. In April, CEO Tim Cook took shots at Google and Facebook, saying, “Privacy to us is a human right…[Apple] could make a ton of money if our customers were our product.” Instead, Apple has quietly forged ties with hospitals and developers, creating an API that allows patients to securely view their electronic health records on their iPhones.

Colin Buckley, an analyst at health data consultancy Klas, says Apple has sidestepped the trickier privacy concerns by not taking possession of patient information. “Today only Apple has made real progress towards becoming part of patient/provider relationships,” he says. “They simply broker the transfer of it directly to and from patients’ phones and healthcare organizations’ electronic medical records. It’s the patients that decide who they want to share their data with and when.” This approach will probably help consumers accept Apple’s newest watch, announced in mid-September 2018, which includes a built-in, FDA-cleared electrocardiogram (ECG) feature as well as other heart-monitoring technology.

Similarly, Microsoft is taking a pro-consumer position, says Firas Hamza, the company’s digital transformation advisor for healthcare. “I don’t think providers can afford to sit back and keep the power of all that patient data in their own hands,” he says. “I think we’re headed to a new democratic healthcare system where the consumer shares power with the payor and the provider.”

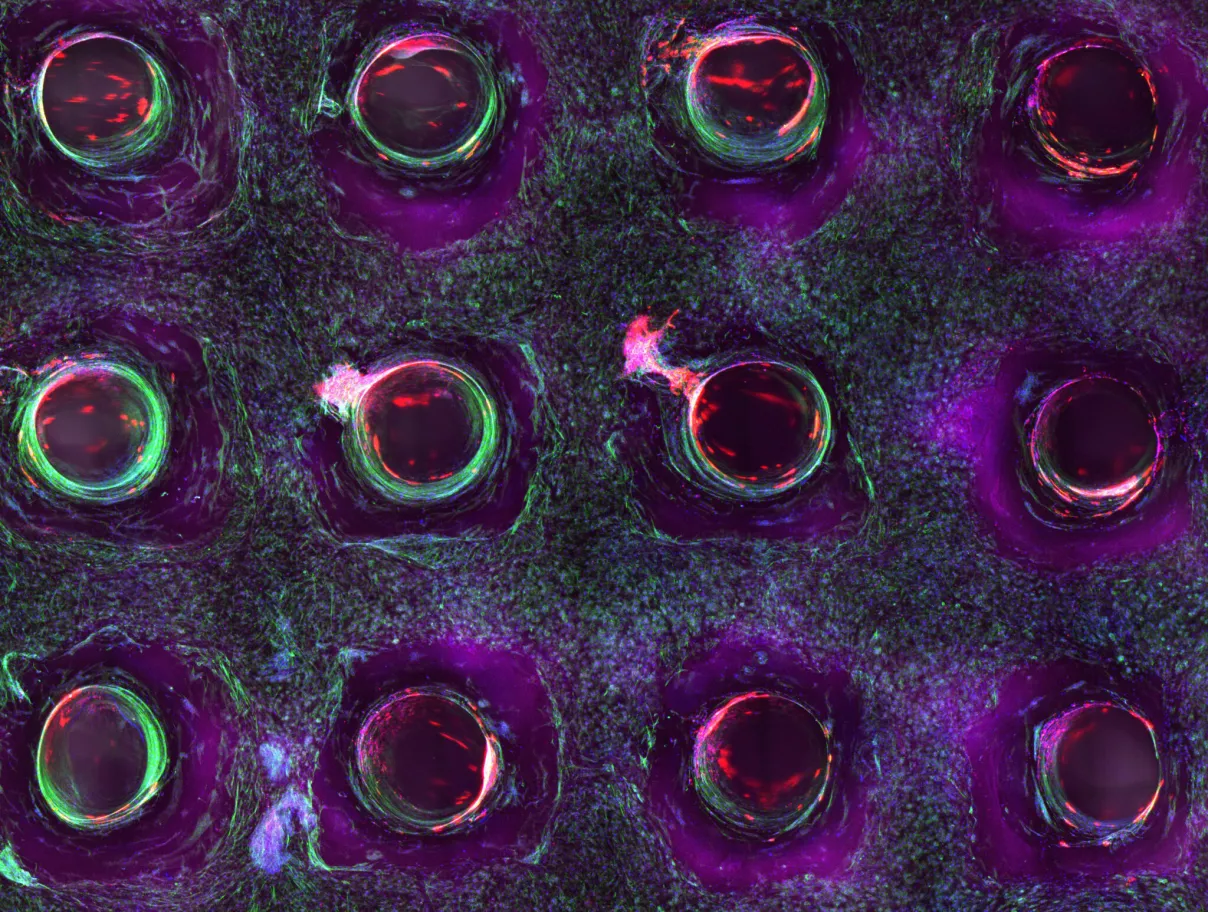

The tech giants are challenging a system that many already consider badly broken. It’s good that they want to make it more efficient, and the responsible use of big data can certainly lead to good outcomes. For example, the Michael J. Fox Foundation, the NIH, and tech partners including Verily are building biobanks of data generated by patient-volunteers living with Parkinson’s disease, to help research into new therapies progress more quickly.

There are many such examples of data leading to positive innovations, a point that the tech giants make often to defend their tactics. “You want to know how tech companies can get consumers’ trust? Save their lives, save their children’s lives,” said John Nosta, an advisor to many of the big tech companies.

And the big tech companies are not alone in trying to disrupt the system. The industry is rife with startups, some of which are rethinking how consumers can control their data and even share in the opportunities that it creates. Linda Avey is co-founder of Precise.ly, a precision health startup that is building a new data model to empower patient-consumers by giving them control over their health data. Precise.ly also proposes that people receive a form of compensation for sharing their personal health data for use in medical research. In a recent blog post Avey wrote, “We all need to focus on building trust with the public, something that’s been eroding…individuals need to be the ones deciding where and how their data are shared and with whom, not companies, researchers, or politicians.”

The consistent thread among most of the new data models, especially those emerging from startups, is empowering patients. Hu-manity.co, a well-funded startup founded by former executives of health data broker IQVIA, for example, says patient data should be respected as patient property and as a human right for each individual. In their book Radical Markets, Glen Weyl, a principal researcher at Microsoft Research, and Eric Posner, a professor at the University of Chicago Law School, examine the current data landscape and encourage consumers to demand compensation and even unionize. “The current wasteful equilibrium results from the dominance of companies like Facebook and Google that thrive off free user data,” they write. Hu-manity.co, Precise.ly, and many other startups anchor their new data models in blockchain-based data repositories designed to put control into the hands of the individual.

Meanwhile, Google, Facebook, IBM, and other large companies are lobbying for new federal privacy legislation that puts more emphasis on innovation than on patient privacy, working furiously to water down regulations restricting the use of patient data. California has passed legislation that mirrors the European Union’s General Data Protection Regulation, which severely penalizes companies for exploiting consumer data. Tech companies insist that medical innovation trumps the privacy rights of the individual.

In 2016, for example, Alphabet’s U.K.-based AI subsidiary, DeepMind, violated the privacy of as many as 1.6 million patients to help fuel research and new treatments for acute kidney injury. Pitching the project as part of a broader strategy, DeepMind has said, “We’re building an infrastructure that will help drive innovation across the NHS.”

Consultant Nosta states more boldly, “There’s no balance between innovation and privacy. Privacy obstructs innovation.”

That’s not how consumers and patient advocates see it, especially as more instances of data exploitation are revealed.

Abigail Christopher is a journalist based in Portland, Oregon.