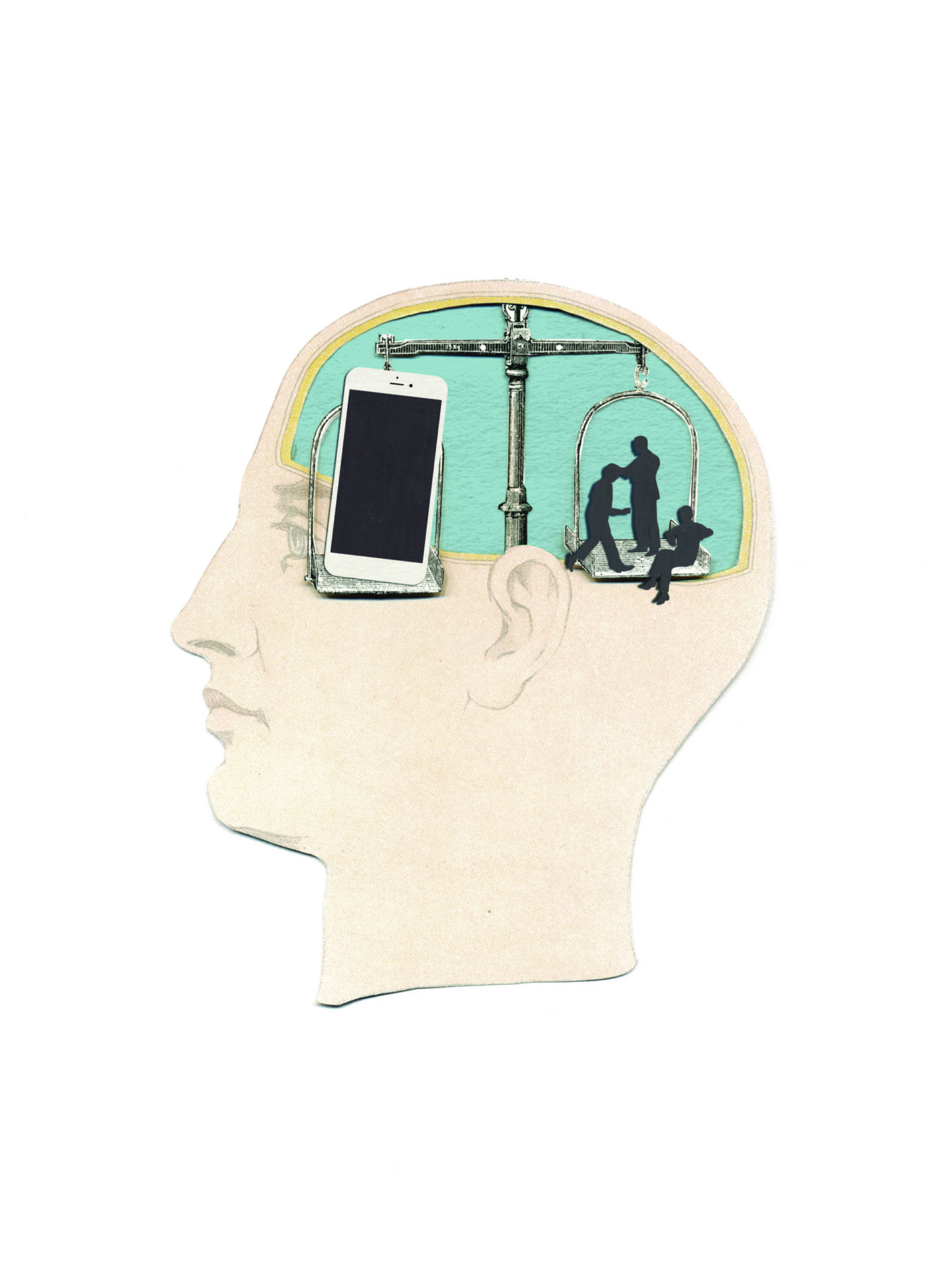

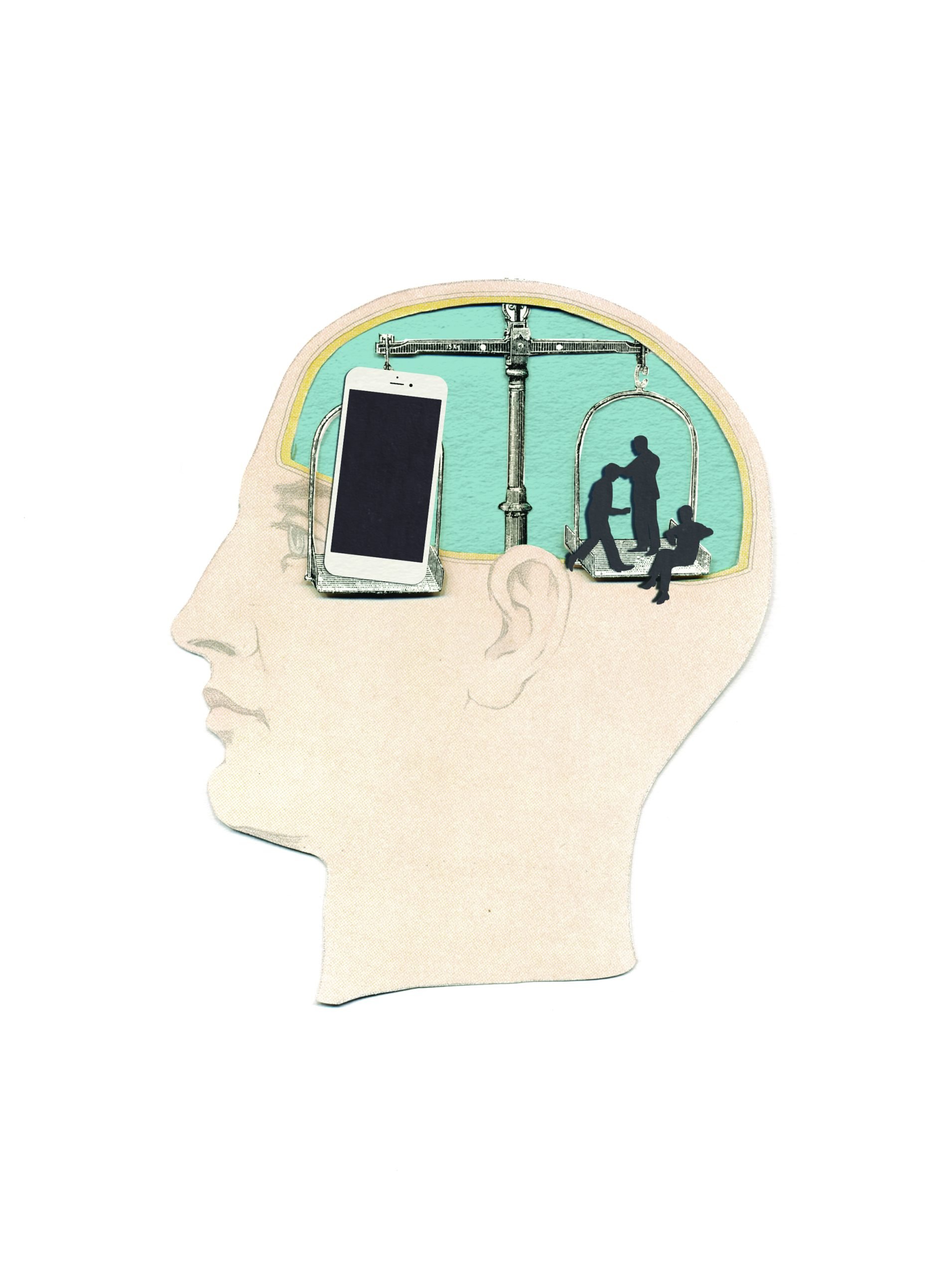

Tech is not neutral. Human values are being coded into our machines, and the implications are vast. We can barely keep up with where tech is taking the economy, society, and our institutions. All computer code embodies, to some degree, the assumptions and beliefs of its makers. So as technology extends ever further through tools like artificial intelligence, we need to ensure that the people writing the software, the computer scientists, are educated in ethics.

More attention is now being paid to ethics education for computer scientists, but so far that education remains largely inadequate for the challenges we face today. That is one reason we are seeing biases, discrimination, and inequities emerge in the digital world that echo those in the physical one. It shouldn’t be this way.

Algorithmic bias has real-world consequences. It can lead, for example, to gender and racial discrimination. Google’s ad software has shown ads for higher-paying jobs to men more often than to women, and has been more likely to display ads suggesting an arrest record alongside searches for names likely to belong to a black person. Latanya Sweeney documented the latter in her 2013 paper, “Discrimination in Online Ad Delivery.”

Artificial intelligence is also finding its way into the criminal justice system, through predictive policing and machine testimony, often in ways that actively preclude transparency and accountability. It’s one thing to use AI to fight a parking ticket; it’s another when courts use AI to inform sentencing and bail decisions. Our information infrastructure is easily manipulated, and when bias is not actively guarded against, it can be built into machine learning systems over and over.

Technologists need to integrate ethical concerns into every project. But without an understanding of the impact of their work, can engineers be expected to be socially responsible? Is it even fair to ask them to be?

Thankfully, societal awareness is shifting, and a sense of responsibility among programmers is growing. There’s the well-known world of “white hat” hackers, who help detect and prevent cybercrime and attacks. Some programmers are starting to refuse to work on certain projects; others have become whistleblowers. DJ Patil, who served as U.S. chief data scientist in the White House Office of Science and Technology Policy under former President Barack Obama, recently started crowdsourcing a code of ethics for data science. Some Stanford computer science undergraduates formed a group called “Stanford Students Against Addictive Devices” and began protesting outside Apple’s headquarters and Apple stores. Academic research on the topic is growing, and new organizations such as the AI Now Institute at NYU are examining the social implications of AI.

So how do we get more socially responsible software engineers? Ethics should be a core topic in university computer science programs. Some tech companies are trying to develop their own ethics programs to address the fact that few of their engineers have had substantive ethics training. The question of what to teach and how to build a curriculum is complex. Does new technology create new ethical issues? Who defines what is right or wrong? Does industry have a role?

The field of computer ethics has a long history, even if its lessons have not always been heeded. It was founded in the early 1940s by MIT’s Norbert Wiener. In 2006, Michael J. Quinn, then at Oregon State University, wrote an important paper entitled “On Teaching Computer Ethics within a Computer Science Department,” and Stanford University has offered ethics and technology courses for 30 years.

To be accredited by the Accreditation Board for Engineering and Technology (ABET), computer science programs are supposed to give students “an understanding of ethical issues.” But that requirement is broad and loosely defined. Many universities require an ethics course for a computer science degree, but there is little uniformity in what is taught; at some schools, you can fulfill the requirement with a course that doesn’t focus heavily on ethical issues. Some courses are taught within the computer science department (Ethical Foundations of Computer Science, University of Texas at Austin); others are interdepartmental (Intelligent Systems: Design and Ethical Challenges, Harvard University, co-taught with the philosophy department). The University of Washington offers a course in its communication department called Exploring the Ethical Questions of New Technology, built around episodes of Black Mirror.

There is little doubt that the world needs more explicit, relevant ethics education for engineers and programmers. But such programs cannot offer a simple, one-size-fits-all solution to complex problems. The ethical considerations for military applications will be very different from those for medical software, and the principles for a companion robot must differ from those for a self-driving car.

Artificial intelligence and machine learning continue their relentless advance; the cyberization of institutions and communities continues; the Internet of Things connects pretty much everything. And now, as technology enters our cells and neurons, allowing us to update not just the software in our devices but our biology itself, the question of what’s right for society and humanity cannot be left to computer scientists alone. We need an educational system and an ethical framework that help them grapple with it.

We won’t get it right immediately. But as neurobiologist Rafael Yuste of Columbia University says: “When the medical code of ethics was first created we were still learning…and it’s held up pretty well.”

Imbuing society’s computer code with more ethical frameworks will be an ongoing process, but we need to prioritize it now more than ever.

Simone Ross is the former director of programming at Techonomy Media.

If Society is Governed by Computer Code, How Will Coders Understand Ethics?

As technology extends further through tools like artificial intelligence, we need to ensure that the people writing the software — the computer scientists — are educated in ethics.