Making a generative AI program “hallucinate” is not difficult. Sometimes, all you need to do is ask it a question. While AI chatbots spouting nonsense can range from “charming” to “obnoxious” in everyday life, the stakes are much higher in the medical field, where patients’ lives can hang in the balance. To keep medical AIs from hallucinating, developers might have to do something counterintuitive: limit what the programs can learn.

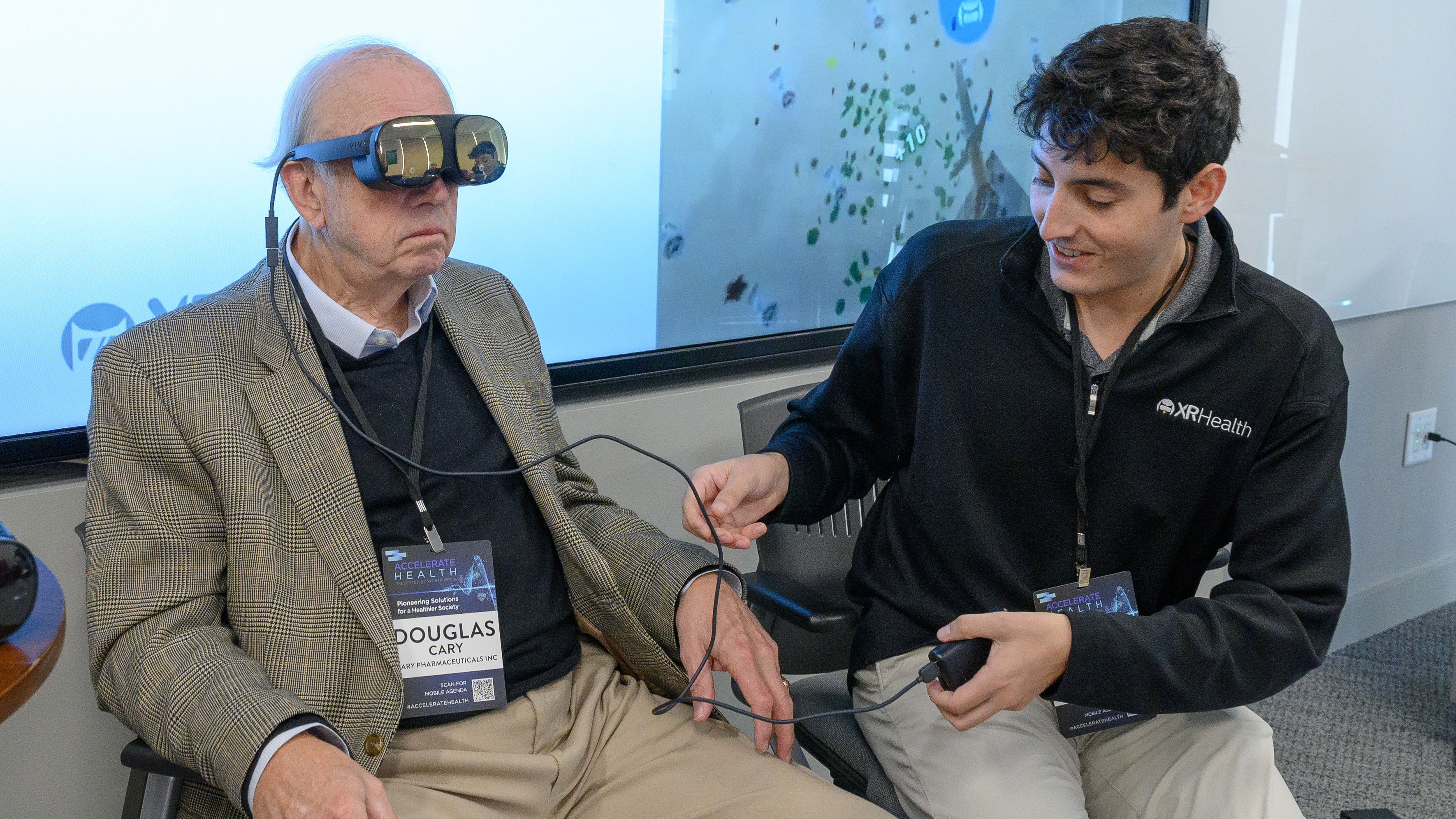

Simon Arkell, CEO and cofounder of Synthetica Bio, touched on this issue during Techonomy’s recent Accelerate Health: Pioneering Solutions for a Healthier Society conference. Arkell joined Wensheng Fan, CEO of Spectral AI, as well as Solomon Wilcots, sports health leader at Russo Partners, for a discussion called “AI’s Medical Odyssey: How Technology Is Rewriting the Healthcare Story.” Naturally, generative AI’s penchant for misinformation was a salient topic.

To start, Arkell gave a brief refresher on what an AI hallucination is:

“As you may have seen, this technology, if you ask it something about yourself or something you know a lot about, it’s going to come back very confidently and give you the answer,” he said. “And it may be wrong, but it’s going to act really confident, like it’s 100% right.” He then asked the audience to imagine what might happen if a medical AI recommended a “confident but wrong” course of treatment for a lung cancer patient.

However, Arkell also reminded attendees that ChatGPT, Google Bard, and similar programs are not the be-all and end-all of generative AI technology. Part of the reason they’re prone to hallucinations is that they simply have so much unfiltered information to work with. Narrowing a program’s scope and training it on one particular topic could reduce the risk of misinformation.

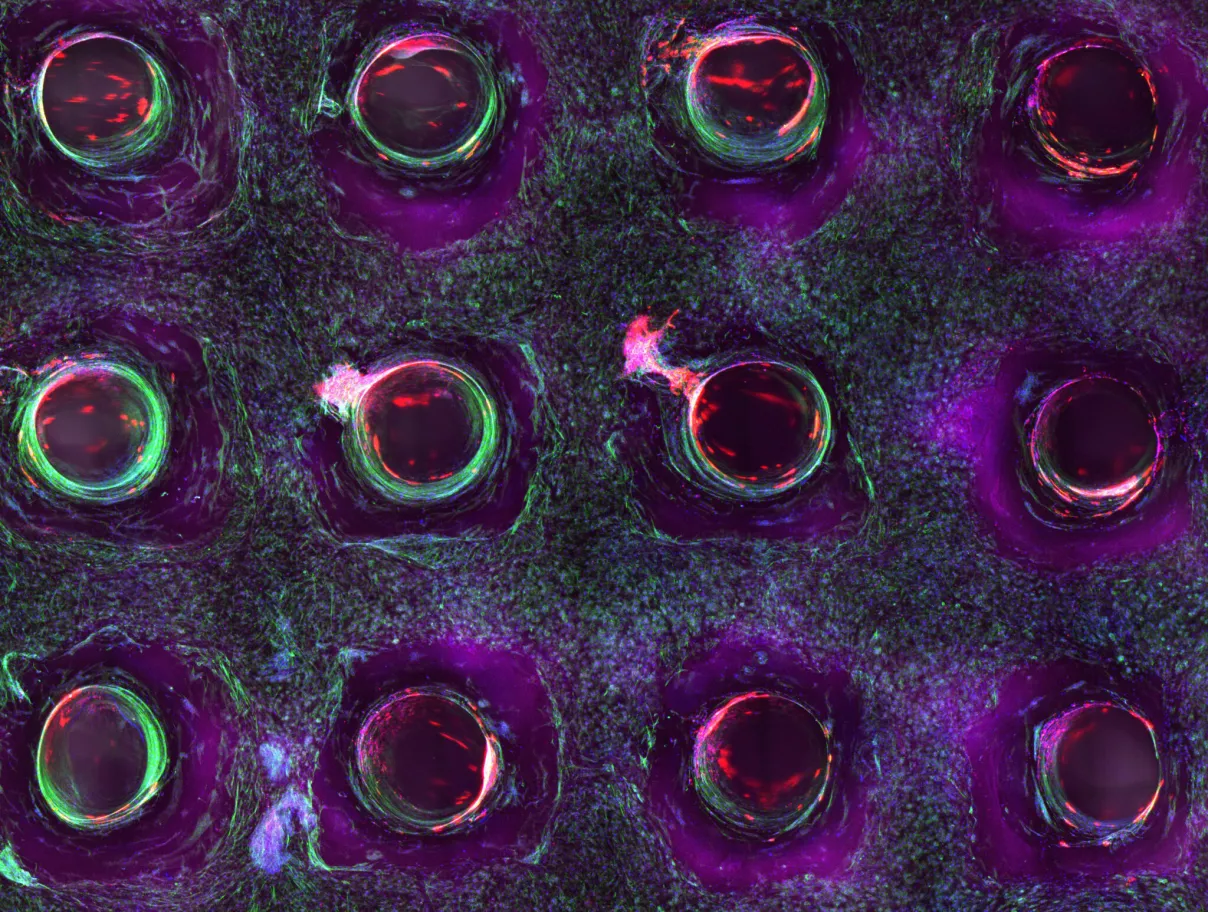

“What we’re seeing over time is much more narrow and defined, focused large language models being developed,” he said. “Think of a genetics or genomics language model. Think of one that’s just trained on claims data.” As an example, he discussed a program that Synthetica Bio helped develop, which coordinates data from millions of patients, as well as information from PubMed (an online library of medical research) and ClinicalTrials.gov.

Fan agreed that AI hallucination fears are well-founded. However, he also said that medical programs will ideally focus on data that humans can’t parse well, while leaving the ultimate decisions to professionals. He posed a hypothetical in which a human would have to follow 50 football games at once to gather all the relevant data.

“Can you follow it? You just can’t,” he said. “Those types of jobs are the better fit for the deep learning [models] … We are using the AI techniques that are truly trying to help the humans who make those complicated decisions. But healthcare providers should be the true decision-makers for any medical procedure.”